Our production RAG system, built on Gemini embeddings and Pinecone, worked fine until one day it didn't.

Overnight, the model we were using (gemini-embedding-004) stopped being supported and was replaced with a new version. At first, this seemed harmless. But soon our entire retrieval pipeline started failing.

What Actually Broke

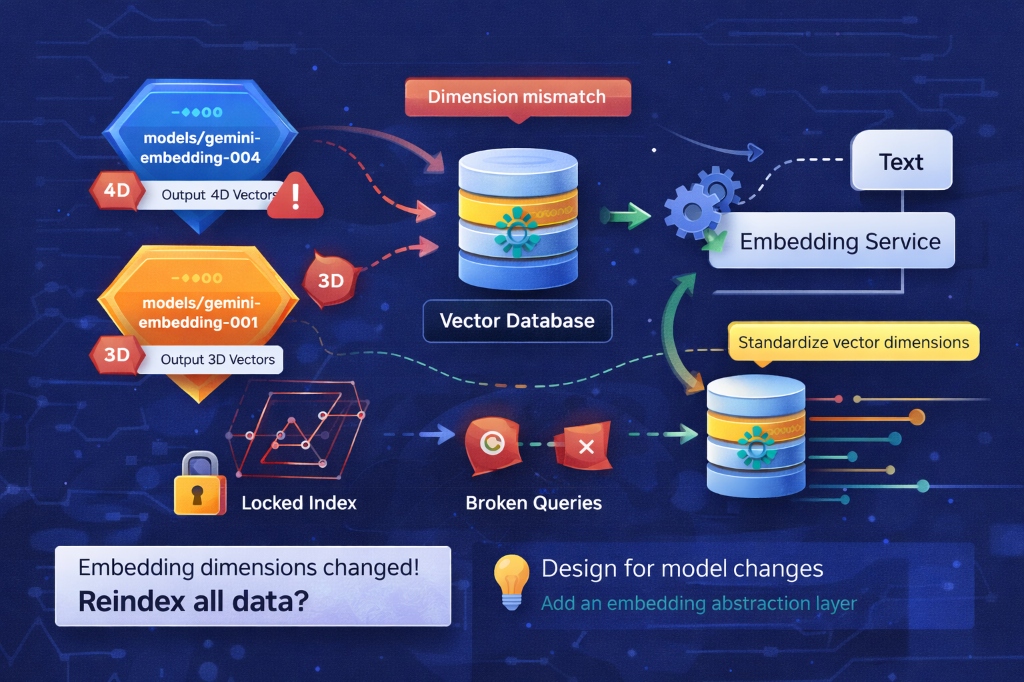

The real issue wasn’t the model name—it was the embedding dimension.

Our Pinecone index was created with a fixed vector dimension. When Gemini replaced the embedding model, the output dimension changed with it. Pinecone does not allow changing the dimension of an existing index, so:

- Existing vectors became incompatible

- Queries started failing

- The only option was to create a new index and reindex all data
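One inexpensive guard against this failure mode is to compare each vector's length with the index dimension before any write or query, so a model swap fails loudly at the boundary instead of deep inside the retrieval path. The sketch below is illustrative only: `check_dimension`, `DimensionMismatchError`, and the numbers in the usage note are hypothetical and do not come from either vendor's API.

```python
class DimensionMismatchError(RuntimeError):
    """Raised when an embedding does not fit the index it is meant for."""


def check_dimension(vector: list[float], index_dimension: int) -> None:
    """Fail fast if the vector length differs from the index dimension."""
    if len(vector) != index_dimension:
        raise DimensionMismatchError(
            f"embedding has {len(vector)} dimensions, "
            f"index expects {index_dimension}"
        )
```

For example, `check_dimension([0.0] * 768, 768)` returns quietly, while `check_dimension([0.0] * 3072, 768)` raises `DimensionMismatchError`. Running a check like this once at service startup against a test embedding would surface a changed model before real traffic does.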

In production, that’s painful.

Why This Happens

Embedding providers continuously improve models:

- Better accuracy

- Lower latency

- Lower cost

But embedding dimensions are not guaranteed to stay stable across model versions. This isn't a Gemini-only problem: any provider can retire a model and replace it with one that has a different output dimension.

Assuming “this dimension will work forever” is a mistake.

How We Solved It

Instead of trying to prevent change, we designed for it.

1. Fixed index dimensions by architecture, not provider

We chose a single dimension for our vector database and treated it as a system contract.

2. Added an embedding abstraction layer

All embedding calls now go through one internal service. If a model changes, we adapt there—without touching Pinecone.
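A minimal sketch of what such a layer could look like, assuming the fixed dimension from step 1 is 768 and that the provider is wrapped as a plain function from text to vector. `EmbeddingService`, `INDEX_DIMENSION`, and the truncate-and-renormalize strategy are all assumptions made for illustration. Truncation in particular is only sound for models trained so that vector prefixes remain useful, which needs to be verified per model.

```python
import math
from typing import Callable, Sequence

INDEX_DIMENSION = 768  # assumed system-wide contract; pick your own value

# A provider is any function that maps text to a raw embedding vector.
Provider = Callable[[str], Sequence[float]]


class EmbeddingService:
    """Single entry point for embeddings; output always matches the contract."""

    def __init__(self, provider: Provider, dimension: int = INDEX_DIMENSION) -> None:
        self._provider = provider
        self._dimension = dimension

    def embed(self, text: str) -> list[float]:
        raw = list(self._provider(text))
        if len(raw) < self._dimension:
            # A smaller model cannot satisfy the contract; refuse rather than pad.
            raise ValueError(
                f"provider returned {len(raw)} values, "
                f"need at least {self._dimension}"
            )
        # Keep the leading values, then L2-normalize the shortened vector.
        head = raw[: self._dimension]
        norm = math.sqrt(sum(x * x for x in head))
        return [x / norm for x in head] if norm > 0 else head
```

Swapping models then means passing a different `provider` to `EmbeddingService`; callers and the index schema stay the same. Matching the dimension keeps writes and queries from being rejected, but vectors produced by different models are generally not comparable with each other, so a model swap still implies re-embedding the stored data (step 4).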

3. Used namespaces for isolation

Each company’s data lives in its own namespace, making migrations safer and easier.
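One way to make namespaces carry migrations is to include an embedding version tag in the name, so old and new vectors for the same company never mix. This is a sketch under assumptions: the `company--version` naming scheme and the `namespace_for` helper are hypothetical, and because every namespace in a Pinecone index shares that index's dimension, the scheme only helps when the dimension itself stays fixed.

```python
import re

_PART = re.compile(r"[a-z0-9_]+")


def namespace_for(company_id: str, embedding_version: str) -> str:
    """Build a namespace name from a company id and an embedding version tag."""
    for part in (company_id, embedding_version):
        if not _PART.fullmatch(part):
            raise ValueError(f"invalid namespace component: {part!r}")
    return f"{company_id}--{embedding_version}"


# With the Pinecone Python client, the result would be passed as the
# `namespace` argument, for example:
#   index.upsert(vectors=vectors, namespace=namespace_for("acme", "v2"))
#   index.query(vector=query, top_k=5, namespace=namespace_for("acme", "v2"))
```

Rejecting anything outside lowercase letters, digits, and underscores keeps the `--` separator unambiguous, so a name can always be split back into its two parts.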

4. Planned for reindexing

We accepted that reindexing is unavoidable and built workflows that allow it without downtime.
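A common shape for such a workflow is to write the re-embedded data into a fresh namespace while queries keep reading the old one, then flip a pointer once the new copy is complete. The sketch below assumes that shape; `reindex`, `ActiveNamespace`, and the callback signatures are hypothetical stand-ins for the real embedding service and vector store client.

```python
from typing import Callable, Iterable

Embed = Callable[[str], list[float]]
Upsert = Callable[[str, str, list[float]], None]  # (namespace, doc_id, vector)


def reindex(
    documents: Iterable[tuple[str, str]],  # (doc_id, text) pairs
    embed: Embed,
    upsert: Upsert,
    new_namespace: str,
) -> int:
    """Re-embed every document into new_namespace; return how many were written."""
    written = 0
    for doc_id, text in documents:
        upsert(new_namespace, doc_id, embed(text))
        written += 1
    return written


class ActiveNamespace:
    """Pointer that the query path reads to decide which namespace to search."""

    def __init__(self, name: str) -> None:
        self._name = name

    @property
    def current(self) -> str:
        return self._name

    def switch_to(self, name: str, expected: int, written: int) -> None:
        """Flip reads to the new namespace only if the copy is complete."""
        if written != expected:
            raise RuntimeError(
                f"refusing to switch: wrote {written} of {expected} documents"
            )
        self._name = name
```

Queries read `ActiveNamespace.current` on every request, so the old namespace keeps serving traffic until `switch_to` succeeds, and it can be deleted afterwards. In a multi-process deployment the pointer would need to live in shared configuration rather than in memory.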

Key Takeaway

Embedding models will change again. Indexes will break again.

The real solution isn’t finding a “stable” model—it’s building a system that doesn’t panic when models evolve.

Once we redesigned for change, model updates stopped being emergencies and became routine maintenance.